Credit: Twitter

It turns out this viral AI tool has become racist

One of the things we humans often praise ourselves for is our brilliance at developing sophisticated technology. Deadset, we’re always patting ourselves on the back and saying, ‘have a look at this cool s**t, bro.’ However, one of our big failures is that even though we’re supposed to be pretty clever, the world is still chockers with racists and, by extension, systemic racism. And here’s the catch: if you’re hoping that the AI overlords of the future will be able to circumvent that nasty human quality, think again.

Yeah, nah, turns out that we’ve got the same bloody problem with our artificially intelligent tech. Take the ImageNet database, for example. It’s driving a lot of AI, and it seems to be inherently racist. In incredibly simple terms, it’s used to train AI to classify what’s in images, humans included, and surely that itself leads to a big problem.

Credit: Twitter

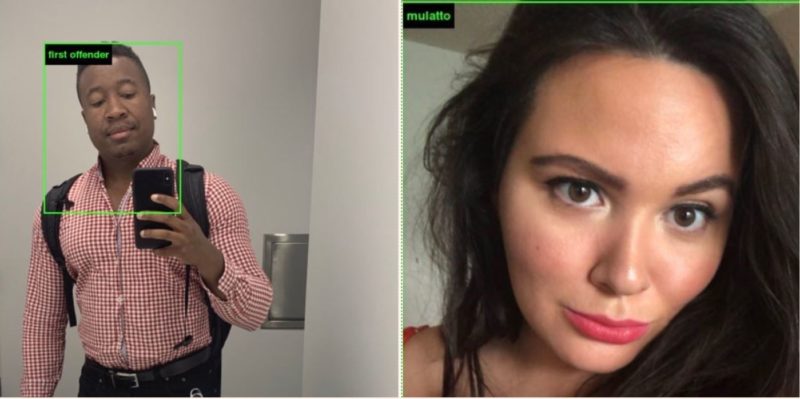

Now, another program called ImageNet Roulette, which lets you see exactly how an AI trained on the ImageNet database labels your face, has been spitting out racial slurs, derogatory insults and cruel barbs when people upload their images. Seriously, it’s almost like you upload your photo and Eric Cartman tells you exactly what he thinks.

That’s because the creators of the program want to draw your attention to the way Imagenet is classifying people. They say the only way they can do that is by literally showing people. In many respects, it’s a fair call.

It’s so bad that the program now comes with the following warning: “Warning: ImageNet Roulette regularly returns racist, misogynistic and cruel results. That is because of the underlying data set it is drawing on, which is ImageNet’s ‘Person’ categories. ImageNet is one of the most influential training sets in AI. This is a tool designed to show some of the underlying problems with how AI is classifying people.”

It doesn’t stop at racial slurs either. Some of us here in the Ozzy Man computer shed uploaded our own images and were told we looked like rapists. While you might see some humour in that, it’s actually a pretty bad problem. If this is the kind of technology we’re going to use to identify people in the future, there are some pretty disturbing repercussions, particularly if an entire section of the population is only going to be classified according to skin colour.

Seriously, the last thing we need is a Skynet that’s also racist.

F**KEN UPDATE:

It seems that thanks to the recent furore over the way ImageNet classifies people, the ImageNet Roulette program is shutting down. Its creators say:

We created ImageNet Roulette as a provocation: it acts as a window into some of the racist, misogynistic, cruel, and simply absurd categorizations embedded within ImageNet. It lets the training set “speak for itself,” and in doing so, highlights why classifying people in this way is unscientific at best, and deeply harmful at worst.

One of the things we struggled with was that if we wanted to show how problematic these ImageNet classes are, it meant showing all the offensive and stereotypical terms they contain. We object deeply to these classifications, yet we think it is important that they are seen, rather than ignored and tacitly accepted. Our hope was that we could spark in others the same sense of shock and dismay that we felt as we studied ImageNet and other benchmark datasets over the last two years.

“Excavating AI” is our investigative article about ImageNet and other problematic training sets. It’s available at https://www.excavating.ai/

A few days ago, the research team responsible for ImageNet announced that after ten years of leaving ImageNet as it was, they will now remove half of the 1.5 million images in the “person” categories. While we may disagree on the extent to which this kind of “technical debiasing” of training data will resolve the deep issues at work, we welcome their recognition of the problem. There needs to be a substantial reassessment of the ethics of how AI is trained, who it harms, and the inbuilt politics of these ‘ways of seeing.’ So we applaud the ImageNet team for taking the first step.

ImageNet Roulette has made its point – it has inspired a long-overdue public conversation about the politics of training data, and we hope it acts as a call to action for the AI community to contend with the potential harms of classifying people.

Final thought: Look, from what we understand, the program could also be accurate, but these blokes raise a pretty bloody important point. The AI community does need to act. We can’t be doing this s**t to people in 2019. It’s just not cricket.

H/T: IFLSCIENCE.